In this blog post, I am going to develop a basic formalism of one of my favourite computer science problems - the problem of maximum flow in a flow network.

The fact that this problem can be expressed in relatively abstract language of graph theory makes it a very powerful tool in algorithmic toolbox, because many

seemingly different problems can be reduced to it. Apart from its practical importance, this problem setting can be used to prove some other results in graph theory,

for instance the Hall’s marriage theorem in a very intuitive way. Some other applications of this problem are counting edge-disjoint paths in polynomial time (yes, that is possible 😀),

computing maximal bipartite matching…

In this post I am going to derive basic formalism of this problem and prove the famous MaxFlow = MinCut theorem:

The value of maximum flow in a flow network equals the capacity of a minimal cut.

However, before we are able to understand what this actually means, we need to rigorously develop the intuitive notion of flow networks and flows.

Some proof techniques and ideas presented here a mix of the CLRS MaxFlow Chapter 26 and and lecture notes written by Prof. Mayr. All important proofs are mine, but the convenient definition of flow network using skew symmetry constraint is taken from the lecture notes and Wikipedia.

This post is going to be a rather long one and it is divided in three large parts:

- Theory of flow networks and network flows - in this section we carefully and rigorously build definitions and series of lemmas from which we gain a strong background in the difficult problem we are dealing with

- The Max-Flow Min-Cut Theorem - in this section we state and prove the core theorem which will make us confident enough that we can build a correct ‘fast enough’ algorithm for the maximum flow problem. Moreover, the algorithm we develop will be completely natural after the section Theory of flow networks and network flows. One of the reasons why this post exists is simply the feeling that everyone knowing Theory of flow networks and network flows could invent such an algorithm.

- Ford Fulkerson’s Algorithm - we develop the famous Ford Fulkerson’s Algorithm and prove it is correct and ‘fast enough’. Moreover, this algorithm is a canonical example of algorithmic technique know as ‘incremental improvement’ which is a very general algorithm design paradigm.

Theory of flow networks and network flows

Definition of a Flow Network

A flow network is a four-tuple (G,c,s,t) such that:

G=(V,E) is a directed graph with two distinguished vertices, a source vertex s and a sink vertex t.

c:V×V→R is a capacity function such that:

- ∀(u,v)∈E,c(u,v)≥0

- ∀(u,v)∈E,c(u,v)=0

Furthermore, we assume that each vertex is on some path from s to t. In other words, we require a flow network to be connected, but not necessarily strongly connected.

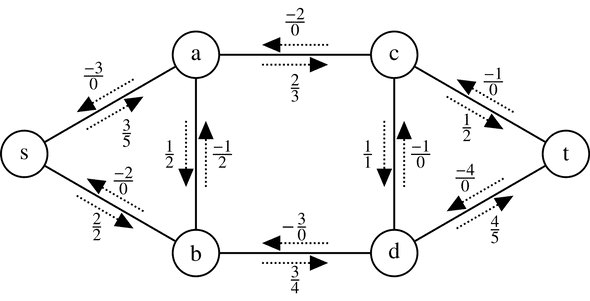

Usually, we visualise flow networks as directed graphs where each edge has a weight representing the capacity function value at that edge.

For a real world analogy it is useful to think of flow networks as pipelines where each corner of pipeline is a flow network vertex and

each pipe in a pipeline represents an edge with some capacity.

Definition of a Network Flow

Let N=(G=(V,E),c,s,t) be a flow network as defined above.

Then a network flow f is a function f:V×V→R satisfying following conditions:

Capacity constraint: ∀(u,v)∈V×V,f(u,v)≤c(u,v)

Intuitively, the flow in some pipe should not exceed capacity of the pipe.

Skew symmetry: ∀(u,v)∈V×V,f(u,v)=−f(v,u)

Intuitively, the positive flow in one direction induces a negative flow in the opposite direction

Flow conservation: ∀u∈(V−{s,t}),∑v∈Vf(u,v)=0

Intuitively, the net inflow at corner where pipes meet equals the net outflow from that corner, except at source vertex which provides the flow to the whole network and sink vertex which absorbs all the incoming flow. Observe that due to skew symmetry, this formulation of flow conservation captures the intuitive meaning that net flow to u is equal to net flow out of u.

We define the value of flow ∣f∣ to be ∣f∣ = ∑v∈Vf(s,v). Hence the value of flow is the net inflow from the source vertex to entire network.

Definition of a Max Flow Problem

Given the flow network N, compute the flow function f such that ∣f∣ is maximal amongst all possible flows.

Before we give an algorithm, we will state and prove a series of lemmas which will help us understand the structure of problem we are dealing with.

Here is the first one:

Lemma 1 (Hygiene lemma)

Claim: Let N=(G=(V,E),c,s,t) be a flow network and let f:V×V→R be a flow function.

Let u,v∈V. Then:

- f(u,u)=0.

- For any v∈(V−{s,t}), ∑u∈Vf(u,v)=0.

- If (u,v)∈E and (v,u)∈E then f(u,v)=f(v,u)=0.

Proof:

- By the skew symmetry, f(u,u)=−f(u,u). Then 2f(u,u)=0 so f(u,u)=0.

- By the skew symmetry, we have ∑u∈Vf(u,v)=∑u∈V−f(v,u).

Now rewrite as ∑u∈Vf(u,v)=−∑u∈Vf(v,u).

By the flow conservation, ∑u∈Vf(v,u)=0.

But then ∑u∈Vf(u,v)=0, as claimed.

- Since (u,v)∈E, we have c(u,v)=0.

Since (v,u)∈E, we have c(v,u)=0.

By the skew symmetry, f(u,v)=−f(v,u).

Since f is a flow, f(u,v)≤c(u,v)=0.

Since f is a flow, f(v,u)≤c(v,u)=0. Then −f(v,u)≥0.

But then f(u,v)=−f(v,u)≥0.

Since f(u,v)≤0 we conclude f(u,v)=0.

Hence f(u,v)=−f(v,u)=0, as claimed.

This completes the proof. ■

Definition of flows on sets

After we have demonstrated that some intuitive properties of flow holds, we will define action of flows on sets.

This will simplify later proofs a lot because we will be able to avoid many double summations and the whole mess which would otherwise arise.

So, let X,Y⊆V. Then define f(X,Y)=∑u∈X∑v∈Yf(u,v).

Now we will define a bit of notational abuse:

- Let f(u,Y) mean f(u,Y)=f({u},Y)

- Let f(X,y) mean f(X,y)=f(X,{y})

Observe that we can express the flow conservation in single statement

∀u∈(V−{s,t}),f(u,V)=0.

Now, we will generalise observations stated above to the set notation.

Lemma 2 (Lifting lemma)

Claim: Let N=(G=(V,E),c,s,t) be a flow network and let f:V×V→R be a flow function.

Let X,Y,Z⊆V. Then:

- f(X,X)=0.

- f(X,Y)=−f(Y,X).

-

If X and Y are disjoint then:.

- f(X∪Y,Z)=f(X,Z)+(Y,Z)

- f(X,Y∪Z)=f(X,Y)+(X,Z)

Proof:

- By the claim below, f(X,X)=−f(X,X).

Now by adding f(X,X) to the right side we obtain 2f(X,X)=0 which implies f(X,X)=0.

- We have:

f(X,Y)=u∈X∑v∈Y∑f(u,v)=u∈X∑v∈Y∑−f(v,u)=−u∈X∑v∈Y∑f(v,u)=−v∈Y∑u∈X∑f(v,u)=−f(Y,X)(By definition)(By the skew symmetry)(By interchanging the summation order)(By definition)

- We have:

f(X∪Y,Z)=u∈X∪Y∑z∈Z∑f(u,z)=u∈X∑z∈Z∑f(u,z)+v∈Y∑z∈Z∑f(v,z)−u∈X∩Y∑z∈Z∑f(u,z)=u∈X∑z∈Z∑f(u,z)+v∈Y∑z∈Z∑f(v,z)=f(X,Z)+f(Y,Z)(By definition)(Since X and Y are disjoint.)(By definition)

Now, we can easily prove the last statement by reusing the previously proved one and applying the ‘Lifting Lemma’.

f(X,Y∪Z)=−f(Y∪Z,X)=−(f(Y,X)+f(Z,X))=−f(Y,X)−f(Z,X)=f(X,Y)+f(X,Z)(By the Lifting Lemma - 2)(By the previous statement)(By the Lifting Lemma - 2)

This completes the proof. ■

Now we prove the important observation about the flow value. By the strict skew symmetry and capacity constraints, it is intuitive to imagine that the flow value is equal to the outflow of network to the sink vertex.

Lemma 3 (Alternative definition of the flow value)

Claim:: Let N=(G=(V,E),c,s,t) be a flow network and let f:V×V→R be a flow function. Then ∣f∣=f(V,t).

Proof:

∣f∣=f(s,V)=f(V,V)−f(V−{s},V)=−f(V−{s},V)=−(f(V−{s,t},V)+f(t,V))=−f(t,V)=f(V,t)(By definition of flow value)(Since V=(V−{s})∪{s},Lifting Lemma - 3)(By the Lifting Lemma - 1, f(V,V)=0)(Since V−{s}=(V−{s,t})∪{t},Lifting Lemma - 3)(By the Hygiene Lemma - 2 in language of Lifting Lemma)(By the Lifting Lemma - 2)

This completes the proof. ■

Many maximum flow algorithms rely on the idea of residual network induced by some flow f.

The purpose of residual network is to capture the potential amount of flow that can be added after the flow f is applied.

It is therefore important to rigourously define what we mean by ‘potentially more flow’ can be added to some edge.

Definition of Residual Networks

Let N=(G=(V,E),c,s,t) be a flow network as defined above.

Let f be a network flow in N. Then the residual network Nf is a four tuple (Gf=(V,Ef),cf,s,t) where:

- cf is a function cf:V×V→R defined as:

∀(u,v)∈V×V,cf(u,v)=c(u,v)−f(u,v)

- Ef={(u,v)∣(u,v)∈V×V such that cf(u,v)>0}

Observe that residual capacities induce edges that may not exist in the original network N.

Those edges are usually named back edges. An example where that may happen is when f(u,v)=c(u,v) and (v,u) does not exist in E.

By the skew symmetry, f(v,u)=−f(u,v).

Now cf(v,u)=c(v,u)−f(v,u)=0−(−f(u,v))=f(u,v). This implies that residual network will get an edge of residual capacity f(u,v) which did not exist in the original network.

Moreover, many maximum flow algorithms rely on the idea of ‘adding’ or ‘augmenting’ flows to other previously computed flows. It is very important to make that idea precise.

Lemma 4 (Augmentation lemma)

Claim: Let N=(G=(V,E),c,s,t) be a flow network and let f:V×V→R be a flow function.

Let Nf be the residual network induced by flow f. Let g:V×V→R be a flow function defined with respect to the residual network Nf. Then f+g:V×V→R defined as ∀(u,v)∈V×V,(f+g)(u,v)=f(u,v)+g(u,v) is a network flow in the original network N with capacity ∣f+g∣=∣f∣+∣g∣.

Proof:

In order to prove this claim, we need to prove two subclaims:

- f+g is a network flow in N

- ∣f+g∣=∣f∣+∣g∣

We will firstly prove 1.

We will prove by checking that capacity constraint, skew symmetry and flow conservation properties hold in the original network N.

Let (u,v)∈V×V.

Then, we have:

(f+g)(u,v)=f(u,v)+g(u,v)≤f(u,v)+cf(u,v)=f(u,v)+c(u,v)−f(u,v)=c(u,v)(By definition of f + g)(Since g is a flow in Nf)(By definition of cf(u,v))

This proves the capacity constraint.

Now check the skew symmetry:

(f+g)(u,v)=f(u,v)+g(u,v)=−f(v,u)−g(v,u)=−(f(v,u)+g(v,u))=−(f+g)(v,u)(By definition of f + g)(Since f is a flow in N and g is a flow in Nf)(By definition of cf(u,v))(By definition of f + g)

This proves the skew symmetry.

For the flow conservation, start from definition. Let u∈V−{s,t}.

Then:

v∈V∑(f+g)(u,v)=v∈V∑[f(u,v)+g(u,v)]=v∈V∑f(u,v)+v∈V∑g(u,v)=0+0=0(By definition of f + g)(Splitting summations)(Since f is a flow in N and g is a flow in Nf)

This prove the flow conservation property.

This proves the function f+g is indeed a flow in N.

Now let us compute its value:

∣f+g∣=v∈V∑(f+g)(s,v)=v∈V∑[f(s,v)+g(v,v)]=v∈V∑f(s,v)+v∈V∑g(s,v)=∣f∣+∣g∣(By definition of f + g’ s value)(By definition of f + g)(Splitting summations)(Since f is a flow in N and g is a flow in Nf)

This completes the proof. ■

Now we introduce the important idea of augmenting paths which are the key part of many network flow algorithms.

Augmenting paths capture the notion of computing the amount by which the current flow can safely be augmented.

Definition of Augmenting paths in flow networks

Let N=(G=(V,E),c,s,t) be a flow network and let f:V×V→R be a flow function.

Let Nf be the residual network induced by flow f.

Then an augmenting path for f is a simple path π from source vertex s to the sink vertex t in the residual network Nf

induced by flow f. The residual capacity of the augmenting path π is the value cf(π) defined as:

cf(π)=min{cf(u,v)∣(u,v)∈π}.

Remark: Observe that, by definition of Ef, cf(u,v)>0 for every (u,v)∈π. Hence cf(π)>0 and this observation will be very important in following sections.

In the following very important lemma, we rigorously capture the idea of safely augmenting the current flow with the ‘safe’ value originating from the residual network.

Lemma 5 (Flow-Path Augmentation lemma)

Claim: Let N=(G=(V,E),c,s,t) be a flow network and let f:V×V→R be a flow function.

Let π be the augmenting path for the flow f. Then fπ:V×V→R defined as:

fπ(u,v)=⎩⎪⎪⎨⎪⎪⎧cf(π)−cf(π)0if (u, v)∈πif (v, u)∈πotherwise

is a flow in the residual network Nf of value cf(π).

Proof:

In order to prove this claim, we need to prove two subclaims:

- fπ is a network flow in Nf

- ∣fπ∣=cf(π)

Let us check the properties of network flow.

Let (u,v)∈Ef.

Then:

fπ(u,v)≤max{cf(π),−cf(π),0}=cf(π)≤cf(u,v)(By definition of fπ)(By definition of cf(π))

This establishes capacity constraint.

Let us consider the skew symmetry constraint.

Suppose (u,v)∈π.

- Then fπ(u,v)=cf(π). But then fπ(v,u)=−cf(π) since (u,v)∈π.

Therefore fπ(u,v)=−fπ(v,u), as desired.

Suppose (u,v)∈π.

- Then either (v,u)∈π or (v,u)∈π. If (v,u)∈π the claim holds by symmetry to the previous case.

Otherwise we have fπ(u,v)=−fπ(v,u)=0 by definition of fπ.

This establishes the skew symmetry.

Let u∈V−{s,t}. Now we expand the definition of flow conservation:

v∈V∑fπ(u,v)=v∈V,(u,v)∈π∑cf(π)+v∈V,(v,u)∈π∑−cf(π)+v∈V,(u,v)∈π and (v,u)∈π∑0=v∈V,(u,v)∈π∑[cf(π)−cf(π)]=0(By definition of fπ)(By observing that for every (u, v) terms appear twice )

This establishes the flow conservation property.

Therefore fπ is indeed a network flow in Nf.

Now we compute the flow value from definition.

∣fπ∣=v∈V∑fπ(s,v)=fπ(s,w)=cf(π)(By definition offπ’s value)(Since π is a simple path, there is only one edge from s, (s, w))(By definition offπ)

This completes the proof. ■

Now we rigorously introduce the idea that augmenting current flow with a flow arising from augmented path can only improve the current flow.

Lemma 6 (Fundamental Estimate of Augmenting flows)

Claim: Let N=(G=(V,E),c,s,t) be a flow network and let f:V×V→R be a flow function.

Let π be the augmenting path for the flow f. Then f+fπ:V×V→R is a flow in the original flow network N with the value of ∣f∣+cf(π)>∣f∣.

Proof:

By the Flow-Path Augmentation lemma, fπ is a flow in the residual network Nf with value ∣fπ∣=cf(π).

By the Augmentation lemma, f+fπ is the flow in the original flow network N with the value of ∣f∣+cf(π)>∣f∣.

This completes the proof. ■

Lastly we introduce the last formal idea of a graph cut.

Definition of the graph cut

Let N=(G=(V,E),c,s,t) be a flow network and let f:V×V→R be a flow function.

Then a cut of N is a pair of sets (S, T) such that:

- s∈S and t∈T

- V=S∪T,S∩T=∅

Now using the definition of a capacity of an edge in N, we lift that definition to the definition of capacity of the cut in the following way.

We define the capacity of a cut (S,T) to be: c(S,T)=∑u∈S,v∈Tc(u,v).

It is useful to think about capacity of a cut (S,T) as the pipe capacity through the border between two disjoint parts of the network, S and T.

Now we are coming to the important lemma which will help us prove the MaxFlow MinCut Theorem.

Lemma 7 (Relationship between cuts and flows)

Claim: Let N=(G=(V,E),c,s,t) be a flow network and let f:V×V→R be a flow function.

Let (S,T) be the cut of a graph according to the previous definition. Then: ∣f∣=f(S,T).

Remark: Before we give the proof, it is useful to think about the power and generality of the claim we are stating.

We are claiming that flow through any cut in graph ‘precisely matches’ the value of the flow across the whole network.

Proof:

We will argue using the Lemma 3, Alternative Definition of flow value.

∣f∣=f(V,t)=f(V,V)−f(V,V−{t})=0−f(V,V−{t})=f(V−{t},V)=f(S∪T)−{t},V)=f(S−{t},V)+f(T−{t},V)=f(S,V)+f(T−{t},V)=f(S,V)=f(S,S)+f(S,T)=f(S,T)(By the alternative definition of flow value)(Since (S, T) is a cut, apply Lifting Lemma - 3)(By Lifting Lemma - 1, f(V, V) = 0)(By Lifting Lemma - 2, skew symmetry)(Since (S,T) is a cut)(Applying set union identity)(Observe that ∀u∈T−{t},u∈(V−{s,t}) the flow conservation applies)(Since (S, T) is a cut, apply Lifting Lemma - 3)(By Lifting Lemma - 1, f(S, S) = 0)

This completes the proof. ■

Now we are ready to state and prove the ultimate bound on the flow value.

Lemma 8 (Fundamental estimate of the flow value)

Claim: Let N=(G=(V,E),c,s,t) be a flow network and let f:V×V→R be a flow function.

Then: ∣f∣ is bounded above by the capacity of any graph cut.

Remark: Observe how general this claim is.

Proof:

Let (S,T) be any graph cut. Then by (Relationship between cuts and flows), we have ∣f∣=f(S,T).

Now expand this definition:

∣f∣=f(S,T)=u∈S∑v∈T∑f(u,v)≤u∈S∑v∈T∑c(u,v)=c(S,T)(Since f is a flow)(By definition of cut capacity)

Since (S,T) was arbitrary, the claim holds for any cut. This completes the proof. ■

Now we state and prove the most important Theorem in this post.

The Max-Flow Min-Cut Theorem

Claim: Let N=(G=(V,E),c,s,t) be a flow network and let f:V×V→R be a flow function.

Then the following three statements are equivalent:

- f is a maximum flow in N

- Residual network Nf contains no augmenting path

- ∣f∣ = c(S,T) for some cut (S,T)

Proof:

We will prove the chain of implications (1⟹2), (2⟹3), (3⟹1).

(1⟹2)

We will prove by contradiction.

Assume that f is a maximum flow in N but there exists an augmenting path of f, say π inside the residual network Nf.

Then, by (Fundamental Estimate of Augmenting Flows), there exists a network flow g inside N such that: ∣g∣=∣f∣+cf(π)>∣f∣.

This contradicts the fact that f is a maximum flow in N. Therefore residual network Nf cannot have an augmenting path.

(2⟹3)

Now assume that the residual network Nf contains no augmenting path. We will deduce that there exists a cut (S,T) such that f = c(S,T).

Firstly, observe that since there is no augmenting path in Nf, vertices s and t are disconnected in Nf.

Now define S, such that S={u∣u∈V, there exists a path from s to u in Nf}. Let T=V−S.

We will prove that (S,T) is a cut.

Since every vertex is either connected to s in Nf or not, we have V=S∪T. Now we claim S∩T=∅.

We will prove by contradiction.

Assume, for the sake of contradiction, that there exists u∈S∩T. Then there exists a path from s to u. But then u∈T, since u is reachable from s. This is a contradiction, since u∈T by construction. Therefore S∩T=∅, as claimed.

Now we claim that s∈S and t∈T. Trivially, s is reachable from s so it must be s∈S. We claim that t∈T.

Since there exists no augmenting path in Nf, vertices s and t are disconnected in Nf, so clearly t∈T.

Now we claim that ∀(u,v) such that u∈S,v∈T,f(u,v)=c(u,v). We will prove by contradiction.

Assume that c(u,v)>f(u,v), since the capacity constraint implies f(u,v)≤c(u,v). Then by definition of cf,

we have that cf(u,v)=c(u,v)−f(u,v)>0. But then, we have that (u,v)∈Ef. However, this implies following:

Since u∈S, there exists a path from s ⇝ u in Nf. Now, since (u,v)∈Ef, there exists a path u⇝v in Nf. Now by composing those two paths, we get that there exists the following path s⇝u⇝v in Nf. Since, v is reachable from s we have v∈S. Since (S,T) is a cut, we deduce v∈T. This is a contradiction,

since v∈T by assumption. Therefore, the assumption that c(u,v)>f(u,v) is impossible, so we deduce c(u,v)≤f(u,v).

By capacity constraint, it must be the case that c(u,v)=f(u,v).

Therefore, ∀(u,v) such that u∈S,v∈T,f(u,v)=c(u,v).

Now consider the flow value ∣f∣. By Relationship between cuts and flows, we have f(u,v)=f(S,T).

Now:

f(u,v)=f(S,T)=u∈S∑v∈T∑f(u,v)=u∈S∑v∈T∑c(u,v)=c(S,T)(By Relationship between cuts and flows)(By definition of the flow from set to set)∀(u,v) such that u∈S,v∈T,f(u,v)=c(u,v)(By definition of cut capacity)

Therefore (2⟹3), as desired.

Now it is left to show that (3⟹1).

Assume that ∣f∣ = c(S,T) for some cut (S,T). By the Fundamental estimate of the flow value, we have that ∣f∣≤c(S,T).

Since ∣f∣ = c(S,T), we deduce ∣f∣ is a maximum possible flow value, so f is a maximum flow.

This completes the proof. ■

Ford-Fulkerson’s Algorithm

Development of the Ford-Fulkerson algorithm

Now we summarise what insight about the maximum flow problem we deduced so far:

- Flow-Path Augmentation lemma (Lemma 5) tells us that we can always safely augment a flow with an augmenting path resulting from residual network induced by that flow. Resulting flow will be a valid flow in the residual network induced by that flow.

- Fundamental estimate of augmenting flows (Lemma 6) tells us that we can always improve the current flow using the augmenting path in the residual network induced by that by simply augmenting the current flow with the augmenting path.

- The Max-Flow Min-Cut Theorem tells us that we can safely stop any computation once we reach a flow for which its induced residual network contains no augmenting path. By the same Theorem, we are sure that the current flow is indeed the maximum flow.

Now the intuition tells us that to solve the maximum flow problem, we can perform the following steps:

- Initialize the current flow f to be trivial flow of value 0.

- While there exists an augmenting path in the network Nf, using the construction of Flow-Path Augmentation lemma, augment the current flow using the augmenting path we found

- When there exists no augmenting path in the network Nf, simply output the flow f value ∣f∣. The result will be the maximum flow!

This is exactly the Ford-Fulkerson Algorithm.

Now we state and prove the last theorem in this post (I promise 😉).

Correctness and Running time of Ford-Fulkerson

Claim: Let N=(G=(V,E),c,s,t) be a flow network. Assume that every capacity in the network is of the integral value.

Then the Ford-Fulkerson Algorithm terminates and correctly computes the maximum flow f⋆ in O(∣f⋆∣) time.

Proof:

Let f⋆ be the maximum flow and let ∣f⋆∣ be its value.

Firstly we need to prove that Ford-Fulkerson Algorithm terminates. Observe that, that the while loop of step 2 performs the exactly the Flow-Path Augmentation lemma (Lemma 5). Hence every iteration of the while loop results in a new valid network flow. Moreover, by Fundamental estimate of augmenting flows, every resulting flow has a strictly greater value than the previous flow. Since the maximum flow has value ∣f⋆∣ and flow from previous step is improved by at least 1 (recall capacities are integral), the while loop of step 2 executes at most ∣f⋆∣ times. Hence the Ford-Fulkerson terminates after ∣f⋆∣ steps at most. Observe that the number of operations that the algorithm performs is bounded above by the work done in the while loop, so we deduce that the running time of Ford-Fulkerson algorithm is O(∣f⋆∣).

Now we need to prove that step 3 outputs the maximum flow. Observe that the algorithm stops after there exists no augmenting path in the residual network induced by the current flow f. Now by The Max-Flow Min-Cut Theorem, the flow f must be the maximal one. Hence ∣f∣=∣f⋆∣.

This completes the proof. ■

In one of the following posts, we will apply the algorithm and theory we developed to solve some seemingly unrelated problems by using reduction to the maximum flow.